AMD’s latest AI chip, the Instinct MI325X, is designed to compete directly with Nvidia’s data center graphics processors.

AMD announced at a recent conference that production of the MI325X would begin before the end of 2024. This is a major step in the company’s effort to win a greater share of the fast-growing AI chip market.

Demand for high-performance GPUs has risen alongside demand for advanced AI technologies such as OpenAI’s ChatGPT.

Lisa Su, AMD’s chief executive officer, said that “AI demand has continued to take off and exceed expectations,” signaling that investment in AI continues to grow across sectors.

Nvidia dominates the market for data center GPUs, with a share of approximately 90%.

AMD remains committed to increasing its share of a market it projects will be worth $500 billion by 2028.

AMD has previously said that tech giants Microsoft and Meta use its AI GPUs, and OpenAI is also said to use them for select applications.

AMD usually sells its AI GPUs as part of complete server systems.

To compete more effectively with Nvidia, AMD is accelerating its product schedule and plans to release new AI chips annually to capitalize on the AI boom.

The MI325X is the successor to the MI300X, which was released late last year. Future chips, including the MI350 and MI400, are also in development.

Nvidia is expected to ship significant quantities of its upcoming Blackwell chips early next year.

AMD’s stock has risen only 20% over the past year, compared with a 175% gain in Nvidia’s share price.

Despite the excitement surrounding AMD’s new AI chip, the company faces a major obstacle in Nvidia’s CUDA, the established programming platform that locks developers into Nvidia’s ecosystem.

AMD has enhanced its ROCm software stack to make it easier for AI developers to move to AMD accelerators.
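
Part of what eases such a move is HIP, the C++ GPU programming interface in ROCm, whose runtime calls closely mirror CUDA’s (for example, `cudaMalloc` corresponds to `hipMalloc`), along with AMD’s HIPIFY tools (`hipify-perl`, `hipify-clang`), which translate CUDA source to HIP. The Python sketch below is a hypothetical, heavily simplified illustration of that kind of name-for-name translation. The function `toy_hipify` and its small mapping table are assumptions made for illustration only. They are not AMD’s actual tooling, which covers far more of the CUDA API.

```python
import re

# Hypothetical, illustrative subset of CUDA runtime names and their HIP
# equivalents. Real translation tools cover far more of the API.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
    "cudaFree": "hipFree",
}

# Whole-word matching (\b) ensures "cudaMemcpy" does not match inside
# "cudaMemcpyHostToDevice", and unmapped names such as "cudaMallocManaged"
# are left untouched.
_PATTERN = re.compile(r"\b(" + "|".join(map(re.escape, CUDA_TO_HIP)) + r")\b")


def toy_hipify(source: str) -> str:
    """Replace each known CUDA identifier in `source` with its HIP name."""
    return _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(1)], source)


if __name__ == "__main__":
    cuda_src = """#include <cuda_runtime.h>
float *d_x;
cudaMalloc(&d_x, n * sizeof(float));
cudaMemcpy(d_x, h_x, n * sizeof(float), cudaMemcpyHostToDevice);
cudaFree(d_x);"""
    print(toy_hipify(cuda_src))
```

Running it prints the same snippet with the header rewritten to `hip/hip_runtime.h` and `hipMalloc`, `hipMemcpy`, `hipMemcpyHostToDevice`, and `hipFree` in place of their CUDA counterparts. Real-world porting also involves kernels, libraries, and performance tuning, which simple renaming does not address.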

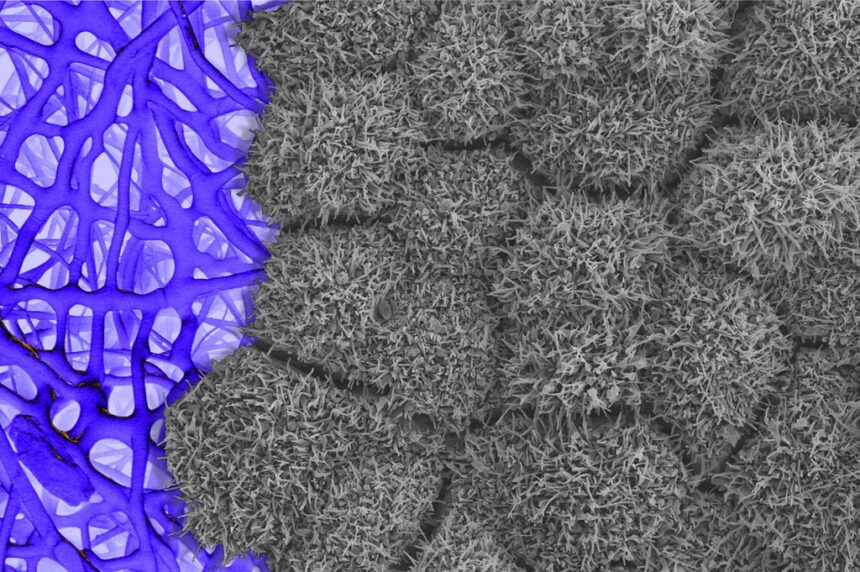

AMD positions its AI accelerators as especially well suited to applications such as content creation and predictive analytics, partly thanks to their advanced memory technology.

Su said the MI325X delivers up to 40% higher inference performance than Nvidia’s H200 when running Meta’s Llama AI model.

AMD’s main business is still central processing units (CPUs), which are at the heart of nearly every server in the world.

AMD’s data center sales in the second quarter of 2024 reached $2.8 billion, more than double the figure a year earlier, with AI chips accounting for only about $1 billion of that total.

AMD accounts for about 34% of total spending on data center CPUs, still trailing Intel’s Xeon line.

AMD also introduced its 5th Gen EPYC processors at the event to challenge Intel.

The new lineup ranges from an 8-core processor priced at $527 to a 500-watt, 192-core chip aimed at supercomputers, priced at $14,813 per unit.

Su stressed that today’s AI still depends heavily on CPU capability, particularly for data analytics and similar applications.
